I am a research scientist at Meta Reality Labs in Redmond, Washington, where I work on computer vision and machine learning for computational photography. I completed my PhD at Brown University, where I was advised by James Tompkin. I received a Fulbright Scholarship for my Master's at the Courant Institute of New York University, where my Master's thesis was advised by Ken Perlin.

Chieh Hubert Lin, Zhaoyang Lv, Songyin Wu, Zhen Xu, Thu Nguyen-Phuoc, Hung-Yu Tseng, Julian Straub, Numair Khan, Lei Xiao, Ming-Hsuan Yang, Yuheng Ren, Richard Newcombe, Zhao Dong, Zhenqin Li. NeurIPS, 2025. arXiv / project page / bibtex

We introduce the first feed-forward method that predicts deformable 3D Gaussian splats from a posed monocular video of any dynamic scene.

Hyunho Ha, Lei Xiao, Christian Richardt, Thu Nguyen-Phuoc, Changil Kim, Min H. Kim, Douglas Lanman, Numair Khan. CVPR, 2025. arXiv / project page / bibtex

We present a real-time-capable method for streaming temporally consistent 6-DoF video from multi-camera input.

Yiqing Liang, Numair Khan, Zhenqin Li, Thu Nguyen-Phuoc, Douglas Lanman, James Tompkin, Lei Xiao. WACV, 2025. arXiv / project page / code / bibtex

We propose a method for dynamic scene reconstruction based on a deformable set of 3D Gaussians residing in a canonical space and a time-dependent deformation field defined by a multi-layer perceptron (MLP).

Edward Bartrum, Thu Nguyen-Phuoc, Chris Xie, Zhenqin Li, Numair Khan, Armen Avetisyan, Douglas Lanman, Lei Xiao. NeurIPS, 2024. arXiv / project page / bibtex

We introduce a novel text-guided 3D scene editing method that can replace specific objects within a scene.

Yu-Ying Yeh, Jia-Bin Huang, Changil Kim, Lei Xiao, Thu Nguyen-Phuoc, Numair Khan, Cheng Zhang, Manmohan Chandraker, Carl Marshall, Zhao Dong, Zhenqin Li. CVPR, 2024. arXiv / project page / bibtex

We present a novel image-guided texture synthesis method that transfers relightable textures from a small number of input images to target 3D shapes across arbitrary categories.

Numair Khan, Douglas Lanman, Lei Xiao. ICCV, 2023. arXiv / code / bibtex

We propose a method for generating tiled multiplane images with only a small number of adaptive depth planes for single-view 3D photography in the wild.

Numair Khan, Eric Penner, Douglas Lanman, Lei Xiao. CVPR, 2023. arXiv / code / bibtex

We estimate temporally consistent depth maps of video streams in an online setting, using a global point cloud together with a learned fusion approach in image space.

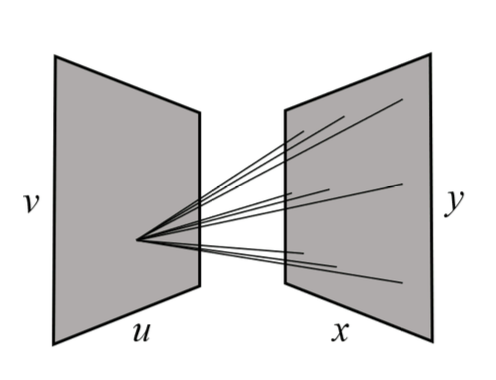

Numair Khan, Min H. Kim, James Tompkin. International Journal of Computer Vision (IJCV), 2023. paper / bibtex

This work shows that multi-view edges can encode all the information relevant to depth reconstruction. To show this, we build on Elder's complementary work on the completeness of 2D edges for image reconstruction.

Yiheng Xie, Towaki Takikawa, Shunsuke Saito, Or Litany, Shiqin Yan, Numair Khan, Federico Tombari, James Tompkin, Vincent Sitzmann, Srinath Sridhar. Eurographics State-of-the-Art Report, 2022. project page / website / arXiv

We present a comprehensive review of neural fields, providing context, mathematical grounding, and an extensive literature review. A companion website contributes a living version that the community can continually update.

Numair Khan, Min H. Kim, James Tompkin. CVPR, 2021. project page / code / arXiv / bibtex

A method to estimate dense depth by optimizing a sparse set of points such that their diffusion into a depth map minimizes a multi-view reprojection error from RGB supervision.

Numair Khan, Min H. Kim, James Tompkin. BMVC, 2021. arXiv / project page / code / bibtex

We present an algorithm that estimates depth maps from light fields quickly and accurately via a sparse set of depth edges and gradients.

Numair Khan, Min H. Kim, James Tompkin. BMVC, 2020. arXiv / project page / code / bibtex

We propose a method to compute depth maps for every sub-aperture image in a light field in a view-consistent way.

Numair Khan, Qian Zhang, Lucas Kasser, Henry Stone, Min H. Kim, James Tompkin. ICCV, 2019 (Oral Presentation). paper / code / bibtex

We use occlusion-aware angular segmentation of an Epipolar Plane Image (EPI) to generate light field superpixels that are consistent across views.

Numair Khan, Anis Ur Rahman. International Journal of Human-Computer Interaction (IJHCI), 2017. paper / bibtex

We propose landmark-based verbal directions as an alternative to mini-maps and examine how spatial knowledge develops in an open-world urban game environment.

Mehwish Nasim, Aimal Rextin, Shumaila Hayat, Numair Khan, Mudassir Malik. Pervasive and Mobile Computing, 2017. paper / bibtex

We predict outgoing mobile phone calls using machine learning and time-cluster-based approaches.

Numair Khan, Mohamed Zahran. International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 2017. paper / bibtex

We decompose the distance transform into a map-and-reduce pattern for efficient computation on GPUs.

Mehwish Nasim, Aimal Rextin, Numair Khan, Mudassir Malik. International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI), 2016. paper / bibtex

In this measurement study, we analyze whether mobile phone users exhibit temporal regularity in their mobile communication.

In Search of a Strategy Against Misinformation

I, Entrepreneur

The Essentials of a Computer Scientist's Toolkit

Teaching Assistant, CSCI 1290 - Computational Photography, Fall 2020

Teaching Assistant, CSCI 1290 - Computational Photography, Fall 2018

Teaching Assistant, CSCI 2240 - Interactive Computer Graphics, Spring 2018

Instructor, Advanced Programming, Spring 2016

Instructor, Operating Systems, Fall 2015

The source code for this website was copied from Jon Barron's website. It is freely available for personal use here.